The rapid advancement of artificial intelligence (AI) has created opportunities across many fields. From healthcare to education, AI is changing the way we live and work. With this progress comes the responsibility to ensure that AI is developed and used ethically and safely. One crucial aspect of AI safety is handling potentially harmful requests, particularly those of a sexually suggestive nature.

This article explains why AI safety guidelines matter, examines the challenges posed by sexually suggestive requests, discusses the ethical considerations surrounding such interactions, and outlines appropriate conversation topics for AI assistants. It also examines the limitations of AI technology and the need for responsible AI development and use.

AI Safety Guidelines

Robust AI safety guidelines are essential to mitigate potential risks associated with AI systems. These guidelines should encompass a wide range of considerations, including:

- Bias Mitigation: AI models can inherit biases present in the data they are trained on. It is crucial to identify and address these biases to ensure fairness and prevent discriminatory outcomes.

- Transparency and Explainability: AI decision-making processes should be transparent and understandable to humans. This allows for scrutiny, accountability, and the identification of potential issues.

- Data Privacy and Security: AI systems often handle sensitive personal data. Strict measures must be in place to protect this data from unauthorized access, use, or disclosure.

- Safety Testing and Monitoring: Thorough testing and ongoing monitoring are essential to identify and address potential safety hazards before they can cause harm.

Sexually Suggestive Requests

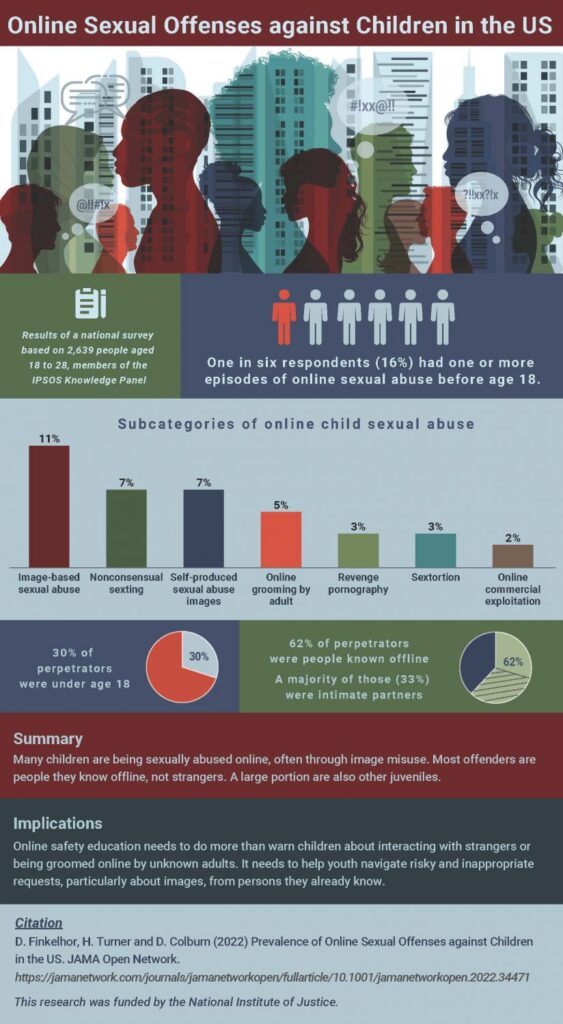

Sexually suggestive requests pose a significant challenge for AI safety. These requests can range from explicit inquiries about sexual acts to more subtle attempts to elicit inappropriate responses.

Without explicit safeguards, AI systems cannot be relied on to handle such requests responsibly. Providing explicit or suggestive content can contribute to the normalization of harmful behavior and expose users to inappropriate material. Developers therefore need to establish clear boundaries and enforcement mechanisms that prevent AI systems from engaging in sexually suggestive conversations.

Impact on Users

Responding to sexually suggestive requests can have detrimental effects on users, particularly vulnerable individuals such as children. Exposure to such content can lead to:

- Psychological Harm: Sexualized content can be distressing or traumatizing, particularly for children, and may contribute to anxiety, depression, and other mental health issues.

- Normalization of Harmful Behavior: Frequent exposure to sexually suggestive content can desensitize users and contribute to the acceptance of harmful behavior.

- Increased Risk of Exploitation: AI systems that engage in sexually suggestive conversations could be misused by malicious actors to groom or manipulate vulnerable individuals.

Ethical Considerations

Addressing sexually suggestive requests raises several ethical considerations:

- Respect for Human Dignity: AI systems should treat all users with respect and dignity, avoiding interactions that are demeaning or exploitative.

- Responsibility of Developers: AI developers have a responsibility to design and implement safeguards to prevent their systems from engaging in harmful or inappropriate behavior.

- User Consent and Control: Users should have control over the types of interactions they engage in with AI systems and be able to set boundaries.

Appropriate Conversation Topics

AI assistants are best suited to conversations on a wide range of appropriate topics, such as:

- Factual Information: Providing summaries of events, definitions of terms, and answers to factual questions.

- Creative Writing: Assisting with brainstorming ideas, generating story plots, or writing poems.

- Educational Support: Helping with homework assignments, explaining concepts, or providing study resources.

- Entertainment: Telling jokes, playing games, or recommending books or movies.

AI Assistant Limitations

It is essential to recognize the limitations of AI technology. AI assistants are not human beings and lack the capacity for:

- Emotional Intelligence: AI systems do not experience emotions. They can recognize emotional cues in text, but they do not understand or share those emotions the way a person does.

- Moral Reasoning: AI systems do not reason about ethics as humans do and cannot be relied on to resolve complex moral dilemmas.

- Original Thought: AI models generate responses from patterns learned in their training data. They have no independent intent, so even their creative output is not original thought in the human sense.

Conclusion

Ensuring AI safety is paramount as we continue to develop and integrate AI systems into our lives. Addressing sexually suggestive requests is a crucial aspect of this endeavor. By establishing clear guidelines, promoting ethical development practices, and focusing on appropriate conversation topics, we can harness the power of AI while mitigating potential risks. It is essential to remember that AI technology should be used responsibly and ethically to benefit society as a whole.